What is a good neural network?

There are two criteria that will be decisive, depending on the objective we set :

- Either we try to make the neural network more efficient , and we will want to improve its precision rate to maximize the right answers. We will therefore try to obtain a neuron network with the highest accuracy rate.

- Either the precision rate is satisfactory, but we want to reduce the size of the neural network , to be able to embed it in IoT for example. So we want to reduce the number of parameters (number of neurons per layer, and number of connections between them), to the maximum.

Evidemment, il n’est pas facile d’atteindre un objectif sans que cela fasse bouger l’autre critère. Si on réduit le nombre de neurones, on va souvent voir le taux de précision chuter. Et si on augmente la précision, cela se fait souvent en ajoutant des paramètres…

Build the best neural network

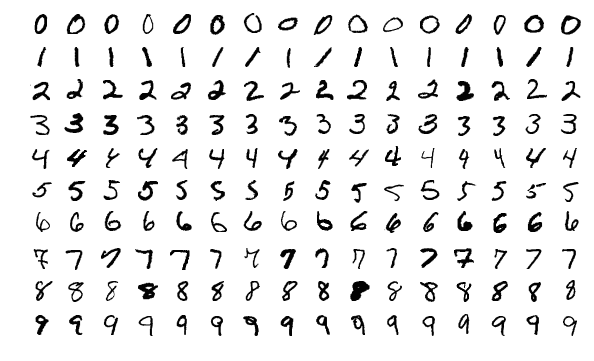

There is a platform called Kaggle that offers tools and competitions for datascientists. One of these contests is called MNIST and the object is to build a network of neurons capable of recognizing handwritten numeric characters.

Hundreds of datascientist compete in these contests and when a champion emerges, we touch the state of the art in terms of neural network model. That champion is the best neural network built by a human being to date. But could a "non-human intelligence" do better?

Optimize a neural network with NNTO

NNTO is a SAAS platform that optimizes neural networks through genetic mutation algorithms. No need to provide your data. Optimization is done on the topology of the neural network. Mutants are generatedthey are selected according to their results to reach the chosen objective.

So we took a MNIST Kaggle champion in order to benchmark, et nous avons réussi à l’améliorer grâce à NNTO. Comme l’enjeu est de montrer la pertinence du modèle dans ce contexte, nous avons décidé d’utiliser les données d’origine du repo de KERAS without any modifications.We evaluated the champion's score with this data, which gave us a score base (a bit below the one announced by the champion's author). All our model optimizations exceeded the calculated score. In addition, our best champion exceeded the score of the author:

[embedyt] https://www.youtube.com/watch?v=7ynU7jvJzBM[/embedyt]

NNTO has managed to improve the neuron champion network in two ways with:

- A more efficient neural network : 99.50% after 10 generations of mutants (implying an 8% decrease in error) compared to the original model.

- A lighter neural network : a parameter reduction of 76% at 175K with another mutant.

Optimize your neural network to break a deadlock

When the results are not there, it is sometimes very difficult to know where the problem comes from. Does this come from the neural network model? Does the problem come from the data? Without the ability to clearly identify the cause, solving the problem is almost impossible, and despite the hours of work invested, we remain stuck in this rut.

The optimization of the neural network with NNTO makes it possible to get out of this impasse. By mutating the model, one solves the problem if the model of the neural network was responsible for it. And if, despite the number of mutations, we are still faced with the lack of results, then, we know for sure that the problem lies with the data. So we can work towards a solution.

If you're curious about the details of the experience, everything is available . And your are welcome to test our mutant neural networks , do not hesitate!